Build your own agent from scratch with LLMFlows

A step-by-step guide to building LLM-powered agents

What are agents?

In recent years, the rise of large language models (LLMs) has been nothing short of remarkable. However, despite their impressive abilities, LLMs have limitations, particularly in tasks involving mathematical calculations, logical reasoning, or accessing up-to-date information.

Agents are programs built around one or more LLMs that can invoke traditional functions (often called tools) to extend their capabilities. By incorporating these tools, agents can leverage the strengths of LLMs while compensating for their weaknesses. This is achieved by prompting the LLMs with specific text that outlines the available tools and demonstrates how to use them. As a result, agents can use calculators, API calls, and web search to improve their performance in areas where LLMs typically struggle.

To illustrate this concept, let's take a look at a simple example of such a prompt:

```python
system_prompt = """
You are a 150IQ assistant that answers questions.

To answer the questions you have access to the following tools:
- search
- calculator

You can use tools in the following way:
- search: [Who is Albert Einstein?]
- calculator: [2 + 3]
"""
```

Every time the LLM generates text based on the rules above, we can parse the text to extract the function name and the function argument (e.g., "search" and "Who is Albert Einstein?") and invoke the respective function. Then we can take the result of the function and add it to the conversation history, so the LLM can use it the next time it generates text.
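The parse-and-invoke step described above can be sketched in a few lines of plain Python. This is a minimal sketch that assumes the `tool: [argument]` convention from the example prompt; `parse_tool_call`, `run_tool_call`, and the two stand-in tools are hypothetical names for illustration, not part of any library.

```python
import re

# Matches lines such as "search: [Who is Albert Einstein?]".
# Group 1 is the tool name, group 2 is the argument inside the brackets.
TOOL_CALL = re.compile(r"^\s*(\w+):\s*\[(.*)\]\s*$", re.MULTILINE)


def parse_tool_call(text):
    """Return (tool_name, argument) for the first tool call in text, or None."""
    match = TOOL_CALL.search(text)
    if match is None:
        return None
    return match.group(1), match.group(2)


# Hypothetical stand-in tools, used only to illustrate the dispatch step.
# The "calculator" stub only understands additions like "2 + 3".
TOOLS = {
    "search": lambda query: f"(search results for {query!r})",
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}


def run_tool_call(text):
    """Parse the LLM output and invoke the matching tool, if there is one."""
    parsed = parse_tool_call(text)
    if parsed is None or parsed[0] not in TOOLS:
        return None
    name, argument = parsed
    return TOOLS[name](argument)
```

For example, `run_tool_call("calculator: [2 + 3]")` returns `"5"`, and that string is what would be appended to the conversation history.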

ReAct Agents

One popular agent architecture is the ReAct architecture. In this paradigm, the agent runs in a loop of reasoning, taking action, and observing the outcome to solve a problem:

The agent starts by reasoning about how it can solve the assigned task and specifies an action based on that reasoning.

The agent invokes a function corresponding to the action, and the output of this function makes up the observation.

Once it has the observation, the agent starts the reasoning phase again, and the loop continues until the agent decides it has all the required information and generates a final answer.
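The reason, act, observe cycle above can be sketched as a plain Python loop. This is a simplified sketch, not the implementation built later in this guide: it assumes the LLM replies in a `Thought: ...` / `Action: tool: argument` format, and the `llm` callable and `tools` mapping are hypothetical stand-ins so the loop can run without any API calls.

```python
from typing import Callable, Dict


def react_loop(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Run a reason -> act -> observe loop until the LLM emits a final answer."""
    history = f"Question: {question}\n"
    for _ in range(max_steps):
        # Reason + act: the LLM sees the whole history and returns a thought and an action.
        step = llm(history)
        history += step + "\n"
        _, _, action = step.partition("Action:")
        tool_name, _, argument = action.partition(":")
        tool_name, argument = tool_name.strip(), argument.strip()
        if tool_name == "final answer":
            return argument
        # Observe: call the chosen tool and feed its output back into the history.
        if tool_name in tools:
            observation = tools[tool_name](argument)
        else:
            observation = "invalid action"
        history += f"Observation: {observation}\n"
    return "No answer within the step limit"


# Usage with a scripted stand-in for the LLM (no API calls):
scripted_replies = iter([
    "Thought: I should add the numbers.\nAction: calculator: 2 + 3",
    "Thought: The result is 5.\nAction: final answer: 5",
])
answer = react_loop(
    "What is 2 + 3?",
    llm=lambda history: next(scripted_replies),
    tools={"calculator": lambda expr: str(sum(int(x) for x in expr.split("+")))},
)
print(answer)  # prints 5
```

The `max_steps` limit matters in practice: without it, an agent that never produces a final answer would loop forever.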

Here is an example:

Question: What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into?

---

Thought: I need to search Colorado orogeny, find the area that the eastern sector of the Colorado orogeny extends into, then find the elevation range of the area.

---

Action: wikipedia: Colorado orogeny

---

Observation: The Colorado orogeny was an episode of mountain building (an orogeny) in Colorado and surrounding areas.

---

Thought: It does not mention the eastern sector. So I need to look up eastern sector.

---

Action: wikipedia: eastern sector

---

Observation: (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny.

---

Thought: The eastern sector of Colorado orogeny extends into the High Plains. So I need to search High Plains and find its elevation range.

---

Action: wikipedia: High Plains

---

Observation: The High Plains are a subregion of the Great Plains. From east to west, the High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130 m).[3]

---

Thought: High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer is 1,800 to 7,000 ft.

---

Action: final answer: 1,800 to 7,000 ft

Building your own agent

ℹ️ Info

The code for this guide is available in Google Colab.

If you want to code along as you read, you can install LLMFlows using pip:

```shell
pip install llmflows
```

To check the source code and other examples, visit our GitHub repository. The official documentation and user guides are available at llmflows.readthedocs.io.

Let's build a simple version of the ReAct agent. To keep things tidy, we will create three separate files:

tools.py, where we will define the tool functions;

prompts.py, where we will store all the prompts;

agent.py, where we will implement the agent flow.

Implementing the tools

We will start by specifying the tools the agent will have access to. To keep it simple, let's create two tools in tools.py - a calculator and a Wikipedia search tool.

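```python
def calculator_tool(calc: str) -> str:
    """
    A simple calculator tool that uses eval() to calculate
    the result of a given expression.
    """
    # WARNING: eval() executes arbitrary Python code, so only use this in a trusted setting.
    return "Observation: the calculation result is " + str(eval(calc))
```

The calculator tool is just a simple function that takes a string representing an expression and returns an observation with the result of the expression. Keep in mind that eval() will run any Python code the LLM produces, so this tool is only suitable for experiments in a trusted environment.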
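If you want to avoid eval() altogether, one option is to parse the expression with Python's standard `ast` module and evaluate only arithmetic nodes. This is a hedged sketch of that alternative, not part of the original guide; `safe_calculator_tool` is a hypothetical drop-in replacement that keeps the same observation format. It requires Python 3.8+, where numbers parse as `ast.Constant`.

```python
import ast
import operator

# Only these arithmetic operators are allowed; everything else is rejected.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.FloorDiv: operator.floordiv,
    ast.Mod: operator.mod,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def _evaluate(node):
    """Recursively evaluate a parsed arithmetic expression."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
        return _BIN_OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_evaluate(node.operand))
    # Function calls, names, attribute access, etc. end up here.
    raise ValueError(f"Unsupported expression: {ast.dump(node)}")


def safe_calculator_tool(calc: str) -> str:
    """Like calculator_tool, but only evaluates plain arithmetic."""
    try:
        result = _evaluate(ast.parse(calc, mode="eval"))
    except (SyntaxError, ValueError, ZeroDivisionError) as error:
        return f"Observation: the calculation failed ({error})"
    return "Observation: the calculation result is " + str(result)
```

Returning an error observation instead of raising lets the agent read the failure and try a different expression on its next step. Note that `**` is still allowed, so very large exponents can be slow.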

And here is the code for the Wikipedia tool:

```python
from mediawiki import MediaWiki  # provided by the pymediawiki package

wikipedia = MediaWiki()


def wikipedia_tool(query: str) -> str:
    """
    Retrieves the summary of a Wikipedia article for a given query.

    Args:
        query: A string representing the title of a Wikipedia article.

    Returns:
        A string representing the summary of the article, or a preset
        string if the article is not found.
    """
    try:
        wikipedia_page = wikipedia.page(query)
        return f"Observation: {wikipedia_page.summary}"
    except Exception:
        # Missing pages, disambiguation pages, network errors, etc.
        return "Observation: The search didn't return any data"
```

ℹ️ Info

To run this function, you will need to install the pymediawiki package:

```shell
pip install pymediawiki
```

Great! Now we have two tools that our agent can use. Let's move to the exciting part - building the actual agent.

Implementing the agent

LLMFlows is a framework for building simple, explicit, and transparent LLM applications such as chatbots, question-answering systems, and agents.

At its core, LLMFlows provides a minimalistic set of abstractions that allow you to utilize LLMs, prompt templates, and vector stores to build well-structured apps represented as flows and flow steps. You can learn more in our introductory post, although it is not necessary for following this one.

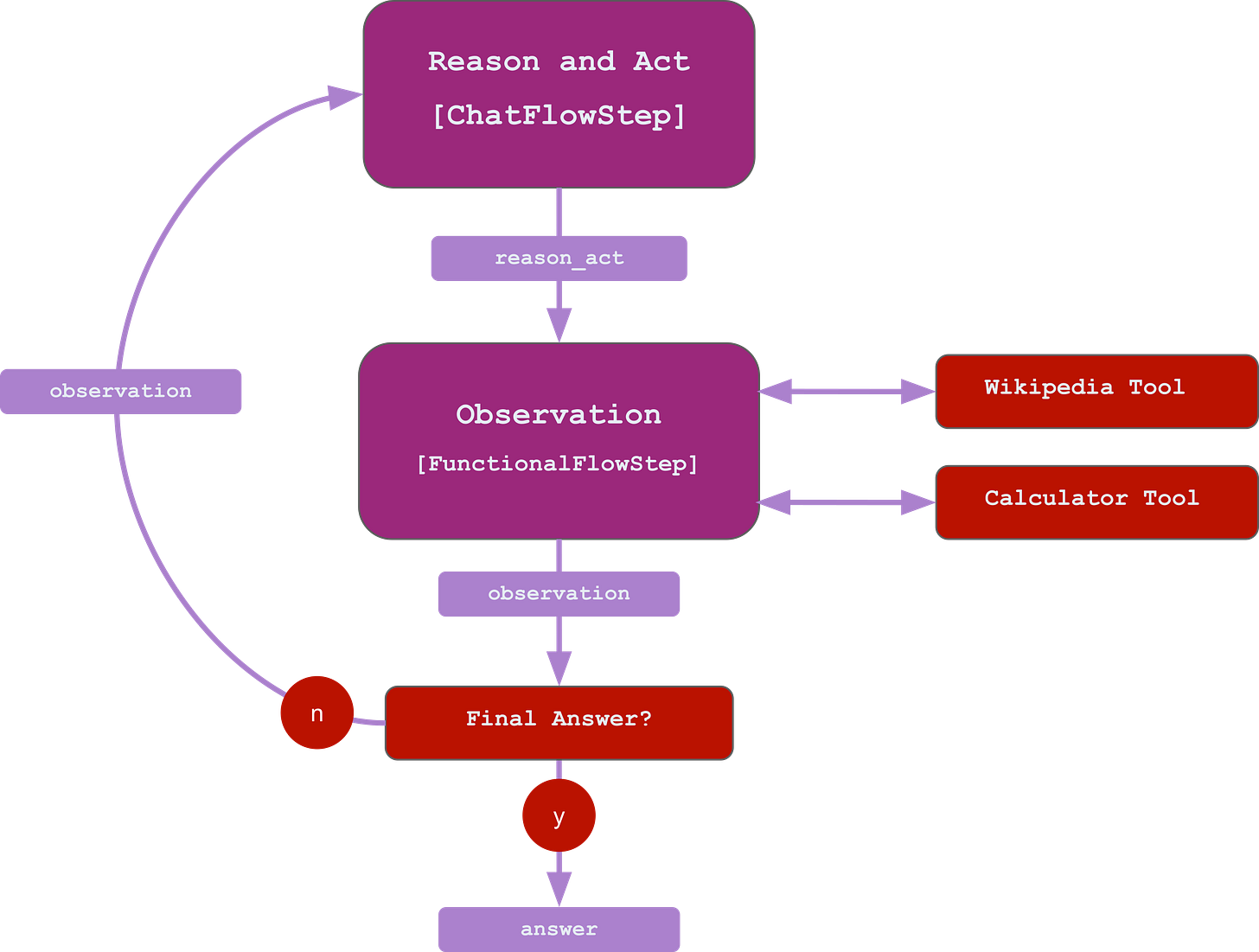

In LLMFlows, an agent can be represented as a flow that runs in a loop. We can implement the ReAct architecture by defining two flow steps:

We can create a ChatFlowStep that uses the OpenAIChat LLM to generate a thought and an action.

Once we have the thought and action, we can invoke the correct function by using a FunctionalFlowStep.

Finally, once we get the result (observation) from the function, we can evaluate it and continue the loop.

Here is what the agent loop looks like:

For the first step, we will use the OpenAIChat LLM, which supports a system prompt. System prompts are useful for specifying the behavior of the LLM, and we can use one to define the ReAct rules.

Let's add it in prompts.py:

```python
system_prompt = """
You are a 125IQ AI Assistant that answers questions by using tools. You run in a loop of Thoughts, Actions, and Observations.
You always start with a Thought and an Action.
At the end of the loop you output an Answer.
Use Thought to describe your thoughts about the question you have been asked.
Use Action to run one of the actions available to you - then return PAUSE.
Observation will be the result of running those actions.

Your available actions are:

calculator:
e.g. calculator: (5 * 3) + 1
A calculator that accepts a python expression and returns the result of the calculation.

wikipedia:
e.g. wikipedia: Jimi Hendrix
Searches wikipedia and returns a summary

Examples:
---
Question: What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into?
Thought: I need to search Colorado orogeny, find the area that the eastern sector of the Colorado orogeny extends into, then find the elevation range of the area.
Action: wikipedia: Colorado orogeny
Observation: The Colorado orogeny was an episode of mountain building (an orogeny) in Colorado and surrounding areas. [not written by you]
Thought: It does not mention the eastern sector. So I need to look up eastern sector.
Action: wikipedia: eastern sector
Observation: (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny. [not written by you]
Thought: The eastern sector of Colorado orogeny extends into the High Plains. So I need to search High Plains and find its elevation range.
Action: wikipedia: High Plains
Observation: High Plains refers to one of two distinct land regions [not written by you]
Thought: I need to instead search High Plains (United States).
Action: wikipedia: High Plains (United States)
Observation: The High Plains are a subregion of the Great Plains. From east to west, the High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130 m).[3] [not written by you]
Thought: High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer is 1,800 to 7,000 ft.
Action: final answer: 1,800 to 7,000 ft
---
Question: Which documentary is about Finnish rock groups, Adam Clayton Powell or The Saimaa Gesture?
Thought: I need to search Adam Clayton Powell and The Saimaa Gesture, and find which documentary is about Finnish rock groups.
Action: wikipedia: Adam Clayton Powell
Observation: Could not find [Adam Clayton Powell]. Similar: ['Adam Clayton Powell III', 'Seventh Avenue (Manhattan)', 'Adam Clayton Powell Jr. State Office Building', 'Isabel Washington Powell', 'Adam Powell', 'Adam Clayton Powell (film)', 'Giancarlo Esposito'].
Thought: To find the documentary, I can search Adam Clayton Powell (film).
Action: wikipedia: Adam Clayton Powell (film)
Observation: Adam Clayton Powell is a 1989 American documentary film directed by Richard Kilberg. The film is about the rise and fall of influential African-American politician Adam Clayton Powell Jr.[3][4] It was later aired as part of the PBS series The American Experience.
Thought: Adam Clayton Powell (film) is a documentary about an African-American politician, not Finnish rock groups. So the documentary about Finnish rock groups must instead be The Saimaa Gesture.
Action: final answer: The Saimaa Gesture
---

Rules:
- Stop writing after specifying an action;
- You never write the observations - observations are provided to you by the tools;
- Always write a thought after an observation;
- Always write an action after a thought;
"""
```

In short, here is what the system_prompt contains:

We start by specifying the behavior and describing the ReAct architecture, explaining that the agent uses thoughts, actions, and observations to solve a problem.

We describe the two tools (Wikipedia search and calculator) and explain how the agent can invoke them.

We continue by providing two example sessions to take advantage of the in-context learning capabilities of LLMs.

We finish by specifying some rules to make sure the generated text is consistent with the ReAct architecture.
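Rules like "Stop writing after specifying an action" are instructions, and a model can still occasionally continue past the action and invent its own observation. One defensive measure, which is our own assumption and not part of LLMFlows, is to cut the generated text after the first `Action:` line before parsing it. `truncate_after_action` is a hypothetical helper:

```python
def truncate_after_action(generated: str) -> str:
    """Keep everything up to and including the first line that starts with 'Action:'.

    Anything after it (for example a made-up 'Observation:' or a trailing
    'PAUSE') is dropped. If there is no Action line, the text is returned unchanged.
    """
    lines = generated.splitlines()
    for index, line in enumerate(lines):
        if line.strip().startswith("Action:"):
            return "\n".join(lines[: index + 1])
    return generated
```

Applying this to the chat step's output before it reaches the tools or the reasoning history ensures that only observations produced by real tool calls end up in the conversation.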

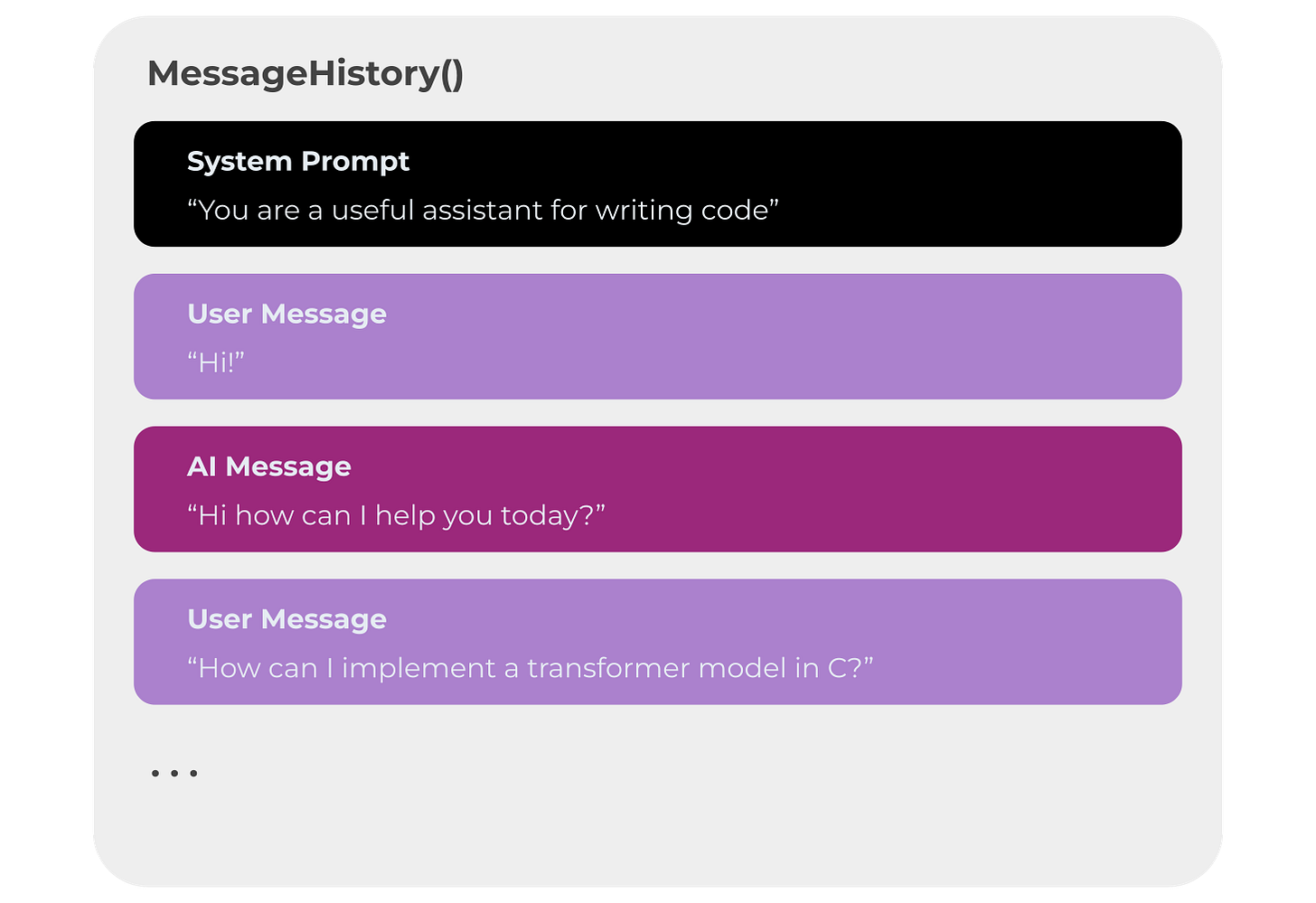

OpenAI's chat LLMs require a message history. As a reminder, here is how the message history works:

For more information, see the official chat completions API guide from OpenAI and the documentation for LLMFlows’ MessageHistory() class.
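Under the hood, the chat completions API receives the conversation as a list of messages, each with a `role` (`"system"`, `"user"`, or `"assistant"`) and its `content`. The sketch below is a hypothetical stand-in that shows that shape. `SimpleMessageHistory` is our own illustration and is not how LLMFlows' MessageHistory class is implemented.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SimpleMessageHistory:
    """Hypothetical stand-in for a chat message history (not LLMFlows' class)."""

    system_prompt: str = ""
    messages: List[Dict[str, str]] = field(default_factory=list)

    def add_user_message(self, content: str) -> None:
        self.messages.append({"role": "user", "content": content})

    def add_ai_message(self, content: str) -> None:
        self.messages.append({"role": "assistant", "content": content})

    def to_api_messages(self) -> List[Dict[str, str]]:
        """Return the messages in the list-of-dicts shape used by the chat API."""
        system = (
            [{"role": "system", "content": self.system_prompt}]
            if self.system_prompt
            else []
        )
        return system + self.messages
```

The system prompt always comes first, followed by alternating user and assistant messages, so the model sees the ReAct rules before any question.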

Let's create the message history and include the system prompt:

```python
from llmflows.llms import MessageHistory

from prompts import system_prompt

message_history = MessageHistory()
message_history.system_prompt = system_prompt
```

The system prompt specifies the behavior of the agent, but we also need a message prompt to pass the question and the reasoning history to the agent.

In prompts.py:

```python
from llmflows.prompts import PromptTemplate

react_prompt_template = PromptTemplate("Question: {question}\n{react_history}")
```

Now we can create the "Reason + Act FlowStep" in agent.py:

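```python
import os

from llmflows.flows import ChatFlowStep
from llmflows.llms import OpenAIChat

from prompts import react_prompt_template

# Read the API key from the environment instead of hard-coding it.
open_ai_key = os.environ["OPENAI_API_KEY"]

thought_action = ChatFlowStep(
    name="Thought + Action Step",
    llm=OpenAIChat(api_key=open_ai_key, model="gpt-4"),
    message_history=message_history,
    message_prompt_template=react_prompt_template,
    message_key="question",
    output_key="thought_action",
)
```

ℹ️ Info

Please note that we are using gpt-4 as the model for the chat LLM since, in our experience, agents perform very poorly with the gpt-3.5 family of models.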

To summarize:

So far, we created a chat flow step that will generate the thought and action from the ReAct architecture.

The chat flow step utilizes a Chat LLM.

A chat LLM utilizes a message history, as shown in the figure above.

The message history can include a system prompt specifying the agent's behavior, which we use to describe the ReAct architecture.

To generate a thought and an action, we also provide a message_prompt_template, which passes the initial question and the reasoning history so far to the LLM through the message history.
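To make the role of the message prompt concrete, here is a rough plain-Python analogue of how the template is filled on the first and on a later iteration. It uses `str.format` with the same placeholders as react_prompt_template; it is an approximation of the idea, not a reproduction of LLMFlows' PromptTemplate.

```python
# Same placeholders as react_prompt_template, rendered with str.format (an approximation).
REACT_TEMPLATE = "Question: {question}\n{react_history}"

question = "What is the age difference between Barack Obama and Michelle Obama?"

# First iteration: nothing has happened yet, so the reasoning history is empty.
first_message = REACT_TEMPLATE.format(question=question, react_history="")

# Later iterations: the previous thought, action, and observation are appended.
react_history = (
    "Thought: I need Barack Obama's birth date.\n"
    "Action: wikipedia: Barack Obama\n"
    "Observation: Barack Obama was born on August 4, 1961.\n"
)
second_message = REACT_TEMPLATE.format(question=question, react_history=react_history)
```

Each new message repeats the question and carries the full reasoning trail, so the LLM always has the whole context when it writes its next thought.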

Our next stop is the "Observation" step. In this step, we will use the output of the previous step to invoke the right tool that the agent wants to use.

To do that, we can use LLMFlows’ FunctionalFlowStep and pass a function that will parse the previous output based on the specifications in the system_prompt.

In tools.py:

```python
def tool_selector(thought_action: str) -> str:
    """
    Invokes a tool based on the action specified in the agent output
    """
    if "final answer:" in thought_action:
        return "<final_answer>"

    elif "wikipedia:" in thought_action:
        print("Using wikipedia tool:")
        # Keep only the rest of the action line (e.g. drop a trailing "PAUSE").
        question = thought_action.split("wikipedia:")[1].strip().split("\n")[0]
        return wikipedia_tool(question)

    elif "calculator:" in thought_action:
        print("Using calculator tool:")
        calc = thought_action.split("calculator:")[1].strip().split("\n")[0]
        return calculator_tool(calc)

    else:
        return "<invalid_action>"
```

This function receives the output of the previous step, parses the string, and invokes a tool based on its contents.
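As you add more tools, the if/elif chain grows with each one. A possible alternative, sketched here under our own assumptions, is to build the selector from a name-to-function mapping so that a new tool is a single dictionary entry plus a line in the system prompt. `make_tool_selector` is hypothetical; it returns the same `<final_answer>` and `<invalid_action>` markers as tool_selector.

```python
from typing import Callable, Dict


def make_tool_selector(tools: Dict[str, Callable[[str], str]]) -> Callable[[str], str]:
    """Build a tool_selector-style function from a name -> tool mapping."""

    def selector(thought_action: str) -> str:
        # Only the first line after the first "Action:" is treated as the action.
        if "Action:" not in thought_action:
            return "<invalid_action>"
        action = thought_action.split("Action:", 1)[1].strip().split("\n")[0]
        name, _, argument = action.partition(":")
        name = name.strip().lower()
        if name == "final answer":
            return "<final_answer>"
        if name not in tools:
            return "<invalid_action>"
        return tools[name](argument.strip())

    return selector
```

With the tools from tools.py, usage would look like `make_tool_selector({"wikipedia": wikipedia_tool, "calculator": calculator_tool})`, and the returned function can be passed as flowstep_fn in place of tool_selector.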

Now we can define the FunctionalFlowStep in agent.py:

```python
from llmflows.flows import FunctionalFlowStep

from tools import tool_selector

observation = FunctionalFlowStep(
    name="Observation Step",
    flowstep_fn=tool_selector,
    output_key="observation",
)
```

To summarize:

We created a functional flow step that invokes a function based on the output of the chat flow step.

The functional flow step runs the tool_selector function.

The tool selector parses the string output of the chat flow step and calls the matching tool with the parsed argument.

The result of the tool call is the observation.

Now we can connect the two flow steps and create the flow:

```python
from llmflows.flows import Flow

# Connect flow steps
thought_action.connect(observation)

# Create the flow
react_agent_flow = Flow(thought_action)
```

Finally, we can create the agent loop:

```python
problem = "What is the age difference between Barack Obama and Michelle Obama?"
max_steps = 10
react_history = ""

for i in range(max_steps):
    result = react_agent_flow.start(
        question=problem, react_history=react_history, verbose=True
    )

    if result["Observation Step"]["generated"] == "<final_answer>":
        break
    else:
        # Add the thought, action, and observation to the history.
        # The extra "\n" keeps the observation on its own line.
        react_history += (
            result["Thought + Action Step"]["generated"]
            + "\n"
            + result["Observation Step"]["generated"]
            + "\n"
        )
```

Here is what is happening in our loop:

We start by specifying the question we want the agent to answer and an empty reasoning history.

We run the flow, which produces a thought, an action, and an observation.

We evaluate the observation.

If the observation is not the final answer marker, we update the reasoning history and run the flow again.

If the observation is the <final_answer> marker, we break the loop.

We are ready to run our agent! Let's start it and see what happens!

```shell
python agent.py
```

```text
Thought + Action Step:
Thought: To answer this question, I need the birth dates of both Barack Obama and his wife, Michelle Obama. I'll start with Barack's birth date.
Action: wikipedia: Barack Obama

Using wikipedia tool:

Observation Step:
Observation: Barack Hussein Obama II ( (listen) bə-RAHK hoo-SAYN oh-BAH-mə; born August 4, 1961) is an American politician who served as the 44th president of the United States from 2009 to 2017. A member of the Democratic Party, he was the first African-American president of the United States. Obama previously served as a U.S. senator representing Illinois from 2005 to 2008 and as an Illinois state senator from 1997 to 2004, and worked as a civil rights lawyer and university lecturer. Obama was born in Honolulu, Hawaii. After graduating from Columbia University in 1983, he worked as a community organizer in Chicago.

Thought + Action Step:
Thought: Barack Obama was born on August 4, 1961. Now I need to find out when Michelle Obama was born.
Action: wikipedia: Michelle Obama

Using wikipedia tool:

Observation Step:
Observation: Michelle LaVaughn Robinson Obama (born January 17, 1964) is an American attorney and author who served as the first lady of the United States from 2009 to 2017, being married to former president Barack Obama. Raised on the South Side of Chicago, Obama is a graduate of Princeton University and Harvard Law School. In her early legal career, she worked at the law firm Sidley Austin where she met Barack Obama. She subsequently worked in nonprofits and as the associate dean of Student Services at the University of Chicago as well as the vice president for Community and External Affairs of the University of Chicago Medical Center. Michelle married Barack in 1992, and together they have two daughters.

Thought + Action Step:
Thought: Michelle Obama was born on January 17, 1964. Now that I have both birth dates, I can calculate the age difference.
Action: calculator: (1964 - 1961) * 12 + (1 - 8)

Using calculator tool:

Observation Step:
Observation: the calculation result is 29

Thought + Action Step:
Thought: The age difference between Barack and Michelle Obama is 29 months, which is approximately 2.42 years.
Action: final answer: The age difference between Barack and Michelle Obama is approximately 2.42 years.

Observation Step:
<final_answer>
```

Isn't this exciting? The agent even decided to go for a more complex calculation and include their birth months. Even better, our results contain all the information you need to determine what happened at each step, making our agent transparent and easy to debug!
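The agent's calculation counts whole months only. As a cross-check that is not part of the agent, Python's standard `datetime` module can compute the day-level gap between the two birth dates reported in the observations:

```python
from datetime import date

barack = date(1961, 8, 4)     # from the first observation
michelle = date(1964, 1, 17)  # from the second observation

# The agent's month-level calculation: (1964 - 1961) * 12 + (1 - 8).
months = (michelle.year - barack.year) * 12 + (michelle.month - barack.month)
print(months, round(months / 12, 2))  # prints 29 2.42

# A day-level calculation for comparison, using 365.25 days per year on average.
days = (michelle - barack).days
print(days, round(days / 365.25, 2))  # prints 896 2.45
```

The exact gap is 2 years, 5 months, and 13 days (about 2.45 years), so the agent's month-based answer of roughly 2.42 years is a close approximation.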

Conclusion

In this post, we discussed what agents are and what problems they solve. We reviewed the basics and discussed a popular agent architecture - the ReAct architecture. Then we created our own tools and our own ReAct agent by using LLMFlows.

Feel free to play with the code and add extra tools by creating more functions and extending the system prompt. Let us know in the comments if you make a more complex agent that can solve interesting problems.

If you liked this post, consider subscribing so you can get notified as soon as the following posts are out.

If you like LLMFlows, please consider starring the repository or sharing it with friends or on social media. And if you are considering contributing, check the contributing section on GitHub.

Meanwhile, if you want to learn more before our next post, feel free to check out the user guide.

Thank you for taking the time to read this post!
